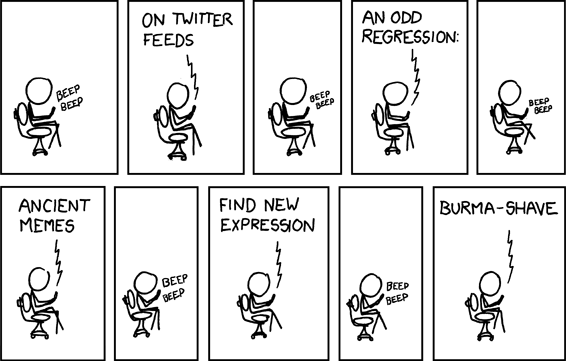

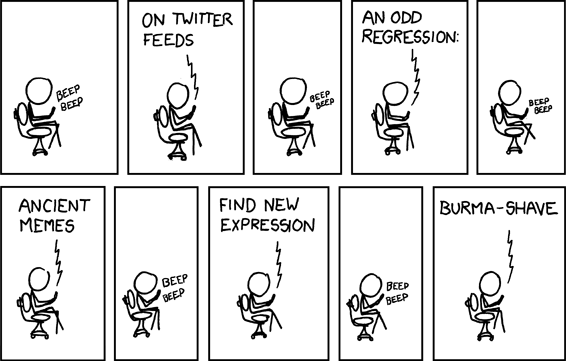

So, yeah: there was this thing in Texas, and well:

TWITTER

That pretty much covers it. Maybe it's alive, maybe it's dead; but you can be effin' sure that it's Twitter.

I've been conducting my own Twitter experiment for a little over four months now and I've learned a lot of things (and yes, SOMEWHERE on this page is the hidden link that will let you share in the joy of my micro-blogged arteries).

The foremost point: like just about everyone else who has tried it, I like it. After getting a good group of people to subscribe to, the feed is easy and addictive to read. I'm not sure if folks enjoy my posting or not (I have at least 15 or so non-spam followers) but I imagine the readership is somewhat like this blog--a bit random, perhaps sparse, and yet strangely, half-dedicated at the same time. Posting is fun, easy, and provides opportunity for a bit of silly, 140-character word play.

I already expounded upon some of the semiotic ramifications of a 140-character communication medium, so I won't repeat that here. Also, just about anyone who knows anything about the Internet, and who likes to tell you what they know about the Internet, will tell you how important Twitter is to the Internet. So, I think I'll leave that one well enough alone too.

But here's something you might not have heard: there are many, many people who have no idea what Twitter is.

These are people who blog. These are people who can find the best gay club in a new city with Google and a quick twitch of the wrist (well, at least one of them can). And these are people in the publishing industry. At a dinner party a few weeks ago, I off-handedly mentioned Twitter, and this group of six mid-twenty-year-olds did not know what I was talking about. Half said, "what's a Twitter?" and the other half said, "so what is that, anyway?"

I have no reason to think poorly of these people; after all, at the time I was only three months ahead of them in the Twitter-verse. But this is something I believe many 'Net-Theorists are not fully recognizing: this revolution in communication is going on only among a vanguard.

So, one might argue, has every new chapter in this new internet saga. Throughout the last twenty years, the Internet has met the different members of the human race one by one, and in different times and places. Some have yet to make its acquaintance. And every single one came into the fold at a different stage in the game.

I'm not a technology guru. I understand the technology behind the Internet and its means of transmission and its interfaces only marginally better than the average person, and certainly not well enough to operate any of them as anything more than a user (though I am currently enrolled in the Computer Programming course at Wikiuniversity!)

But what I do understand, and actually have some professional and theoretical training in regards to, is methods of communication, language, and human psychology. I am seeing something occur here--and although you may have heard this about 100 times in the past year (and 20 of them since SXSW), I believe it is true: this is something that has never happened before.

One can talk about the digital revolution through any number of metaphors relating to other advances in information technology in the past several millennia. I'm not going to. I'd rather talk about what we are doing now.

And this is it: we are conflating our language with our method of transmission. In other words (duck your head while the theory comes past), we have seized our means of communication by creating a productive unity between the product (meaning/symbol) and the production (expression/transmission).

Or rather, we are seizing it. Not all have done so, and those who have begun are not nearly finished, even if there were such a final state.

Let me say it over again, but simpler, because I really think this is an important point.

The method of digital production (the networked computers and communication lines we commonly refer to as the Internet) has dropped the cost of producing, distributing, and consuming information to practically nothing. Furthermore, along with the spread of end-user information such as news and media, comes the spread of the knowledge of how to manipulate, and further seize and shape the technology of digital production. I can learn programming on the Internet for free (or at least in theory--as I have yet to actually do so). A real example: a child of nine in Southeast Asia can become a certified Help Desk technician. Another example: a person using the Internet can invent, create, and promote their own communication client, with little-to-no actual investment of materials used, other than the computer they already had.

Open-source, APIs, and so forth. But here is the other interesting part: through word of mouth, networking, and good old trial and error, one Internet user's programming project becomes an Internet start-up. This start-up becomes a business. This business project changes the way the entire world communicates. One does not change the relations of production by oneself, but does so in concert with a dedicated and involved group: a network. Crazy, no?

None of this is news, really. But it's still pretty awe-inspiring when you step back and look at it. Back in the old days, inventors used to die broke, sick, and alone. They were persecuted by the Church, and the children in the village would hurl fruit at them when they would step outside to rake the gravel outside their hut. These days all you have to do is read a lot, practice, and then you sell your craft project to a VC, move to California, and write your own biography. Crazy!

Here's what's really crazy: the pace has sped up for the users as well. They are part of the network; they are both the means of production as well as party to the relations of production, and they work uncontrolled by any boss. Therefore, mass adoption is only possible when the masses themselves undergo a revolution in production. But even though a critical mass may be joining a particular revolution in production, they are leaving people behind. And this is totally okay, but strange, in the face of the pace of technology. Most people don't even know how Twitter works, let alone why it's good. But now, simply knowing what Twitter is and updating your status isn't enough. You have to use a good client, with search capacities, TwitPic, and a URL-shrinker all combined. You have to Trend. And you can't even just Trend! This past weekend one had to construct crazy Boolean search constraints just to find out where the tacos were at. Just wait until you have to write your own App on the fly at an event to handle the ever-evolving and expanding data stream pouring out of the API.

Try explaining #SXSW to someone who doesn't use Twitter, but only uses Facebook. It barely makes sense to someone who hasn't shifted their means of production along with the revolution in the network. It's not enough to know how to use the Internet--you have to own the interface. Communication is not a matter of being able to read, or even operating a card catalog. Ever try to explain how to Google something to a person unfamiliar with a search engine? You have to be a skilled operator of the means of communication to be able to communicate. Pretty soon you might have to have a Library Science degree just to figure out what you're missing.

This is not simply literacy. This is an ability to critically think on the fly--to creatively craft information and symbols, and interpret, in a constant productive and consumptive process. The old Rhetoric and Speech classes of yesteryear will be replaced with Reg. Ex. and Javascript.

I'm looking at my browser window right now. Firefox. I have five tabs open. One is my iGoogle page, with news, RSS Reader, Twitter, and Email widgets all included. I'm glad I can fit them all into one page. I have my blog edit screen open, two half-read articles, and another blog post open for reference. I have a full bookmark bar at the top, but I hardly use these anymore. I have Javascripts for Google Notebook to handle my evolving collection of links and notes. I have a Javascript button to subscribe to an RSS feed, and a button to post a page to my Shared Item page (part of Google Reader). I also have a link to my off-line TiddlyWiki, where I am compiling notes for a writing project. I play mp3s through FoxyTunes, and I have just installed Ubiquity, so I can jump to certain tasks like Twitter or Email with a hotkey and a typed command.

And with this personalized Interface, I am barely keeping up! Several of my RSS feeds are devoted to sources to keep me abreast of the new formats and apps reaching the market. I have to know what's going on, just to maintain the struggle to know what's going on!

This concept, the ongoing technological revolution in means and relations of production, is whispering to me. It is whispering to me about a future for the Internet (cough and head for the exits, it's prophet-brain-dump time!):

[starry-eyed, swirly, white-out fantasy bells...]

Twitter will meet Ubiquity or another semantic web program half-way. Using some bastardization of Javascript, mutated by downloaded user-customized commands and uniquely-hacked libraries, Internet users will message, email, search, read, and archive data using these complex 140-character commands. Most of the text sent back and forth between users will not resemble written speech--it will be a hybrid of scripts and links, slangs and references. There will be pockets of written text at fixed URLs--the remnants of today's blogs and wikis. Everything will be accessible with XML or some derivative (though probably not fully "semanticized"), so it can be searched, compiled, subscribed, shared, and archived via the TwitScripts. "Privacy", in terms of personal data, will break down as a concept. You will not have a password--you will have a registered device. You will log on to a single user-name (perhaps a separate one for business) and launch your TwitScripts from an open and readable timeline. The data must flow...

[...trauma-inducing crash back to reality.]

But this is only one possible future, for certain users who are adept and interested enough to learn TwitScript. For those who are not interested in this particular technological revolution, other interfaces will become popular. For instance, there is also the MySpace future.

MySpace is AOL. There--I said it. It is a portal to media content. To be fair, the user-generated elements of MySpace, YouTube, and Facebook are much more interesting (due to their variously-shifting standards of user-control) than AOL's portal ever was. But the goal is the same--a controlled (and heavily advertised) environment in which users can log on and roam about, never having to learn anything new or create an interface from scratch. Profiles, skins, centralized app access--it's the trading card game of the web.

But this appeals to certain people--especially young people. It is easy, and the community is ready-made. Sign on, and join your school first. Then, form other groups from that. This will be the media-tized Internet of the future. Note: not the future of Internet media, but the future of the Internet as a media channel. Imagine--Microsoft buys MySpace or Facebook, makes a few connections and software upgrades, and all of a sudden the Xbox is the WebTV kids actually want. For people who are not interested in learning the means of distribution, these media portals are the perfect product.

And then there is the regular old Internet as well. I think of a guy I know at work--he loves Craigslist. He struggles with Mapquest, but is on Craigslist almost every day, looking for deals. He has that one interface down, but doesn't have a need to learn anything else. There are people who are the same with eBay, or their favorite news/discussion sites. Or even just Google--find my movie times, and I'm out. The capacity of the user to learn the means of communication dictates how far he or she will choose to go, and more importantly, how far the technology will go with them.

My TwitScript conception of the future (for the record, this term is, along with the rest of this blog, under CC license as of now [is he joking? or serious?]) is the direction in which I imagine those pushing the limits of the technology will take it. To deal with the increasing amount of information available in protean distribution formats, we will need to become literate in the mechanics of information distribution--this includes text mark-up languages, browser mechanics, and the new consumer info "packet": the Tweet (more about the power of the 140-character set in that earlier post I alluded to). The line between the cutting-edge users and the programmers will diminish, just because of the rapidity of the pace. The designers will be the beta testers, the early adopters, and the constituency. They will be the only ones that matter, from the Twitter-verse's perspective. Think about it: when you are Twittering, what else really matters? You are communicating with other users, for other users.

Here is a post by Tim O'Reilly (@timoreilly) from a day or so ago:

RT @elisabethrobson: Interesting stats from the iPhone 3.0 preview yesterday: (via @iphoneschool) http://cli.gs/nL1yJ5 #iphone

Look how far we are already! Only 85 characters of this are actually readable text! The rest are hyperlinks, short-hand, tags, and citations. And in fact, because it is a Re-Tweet, none of it is his original words. This is an index; it points in a direction through the network, distributing information even though, substantially, it itself says almost nothing. And it doesn't need to, because by utilizing the method of internet indication (the hyperlink) Tim is giving us more information than 140 characters ever could. He is linking us into the network, and insinuating us into a pattern of unlimited knowledge, in a gesture that is nonetheless reasonable and understandable.
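For the script-inclined, here is a rough Python sketch of that split between readable text and index. Which tokens count as "index" (the RT marker, "via", @-mentions, hashtags, and links) is my own assumption, as are the function names `is_index_token` and `split_tweet`; a different rule would give a different tally.

```python
# Assumption: these shorthand words point elsewhere rather than say something.
SHORTHAND = {"RT", "via"}
# Assumption: these prefixes mark mentions, hashtags, and hyperlinks.
INDEX_PREFIXES = ("@", "#", "http://", "https://")


def is_index_token(token):
    """Return True if a whitespace-separated token is a pointer, not prose."""
    core = token.strip("():,.;!?")  # ignore punctuation wrapped around the token
    return core in SHORTHAND or core.startswith(INDEX_PREFIXES)


def split_tweet(tweet):
    """Split a tweet into (prose_text, list_of_index_tokens)."""
    prose, index = [], []
    for token in tweet.split():
        (index if is_index_token(token) else prose).append(token)
    return " ".join(prose), index


TWEET = ("RT @elisabethrobson: Interesting stats from the iPhone 3.0 preview "
         "yesterday: (via @iphoneschool) http://cli.gs/nL1yJ5 #iphone")

prose, index = split_tweet(TWEET)
print("prose:", prose)
print("prose characters:", len(prose), "of", len(TWEET))
print("index tokens:", index)
```

Everything that lands in the second list is a gesture toward somewhere else in the network; only the first string is "writing" in the old sense.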

I promised that I actually had some theory for you regarding semiotics and psychology, or other such nonsense. If you are not interested in such things, feel free to skip out now, taking the conclusion: Twitter is the beginning of a revolution in the means of communication, to a conflation of content and expression. But, for those who read my Marx-between-the-lines, and desire more, here is a deliciously (or perhaps annoyingly, depending on your preferences) difficult description:

The signifier, as the point of expression for meaning (the signified), has been receiving an altogether privileged place in our understanding of language. Whether it be the Holy Word, the unattainable signifier of Lacan, or even the juridico-discursive power of the "I" point in modern testimony, the moment and form of expression (I think therefore...) is seen to be the cutting-edge of the language tool.

While this signifier is hardly diminishing in its psychological position (consciousness demands a position for the "I"), our evolving technology of expression is reducing its sacred position over the signifier/signified duality. The psyche, as a technological realm of semiotic expression, is not in itself shifting; but in our current relations of production, in which our minds are interfacing with digital networks, we are ironically becoming "unwired" from our binary (the basic two digits) understanding of our own communication. We are not just signifying now, we are manipulating the way we signify as part of the signification.

The role of the author is shifting. The power of attribution to a fixed, historical "I" is less important than the information to be understood. Understanding, and hence, expression, is less reliant on the signifier as a perfect concept of content production. Misspellings are common, and ignored. If anyone is asked, of course the signifier still plays a role, but as the signifier grows in scope to encompass not only the privileged identity between word and speaker, but also between a choice in language, distribution network, semantics, time, and distribution, the signifier is becoming more meaningful as a material object. We are bringing the signifier back down to earth, muddying it with the effluviance of the signified phenomena, and enacting a phenomenological semiotic, rather than a formal (Platonic, Hegelian, etc.) semiotic. To appropriate Merleau-Ponty, our words are again made flesh. To appropriate Marx, our commodities are returned to the realm of production and use-value. To appropriate Freud, our fetishes are no longer abstracted neuroses of our unconscious investments, but properly sublimated transferences: well-oiled psychic machinery.

When we type hypertext, we are not only indicating, we are expressing the act of indication. This is not only "something to see", but "something I want you to see". Please click on this. The signifier now has supplementary value as a signified. The signified and signifier meet again, not through a reduction of the difference, but by a meeting of the two aspects in a properly material plane--abstraction is conquered (aufhebung alert!) by a reevaluation and redeployment of the means of this semiotic production: scripting is a proletarian consciousness of digital writing.

Now: the sense in which the two terms "signifier" and "signified" are used shifts between every philosopher's iteration, and even within each author's use (somebody should be able to say something significant about that). To draw out this complicated dynamic and really treat the two terms fairly, I could read you the entirety of Of Grammatology, but I think we would all be relieved if I did not. (And certainly Derrida's own confusing play with the shifting meaning of these two words is indicative of his own philosophy. I imagine one could agree with that statement whether one appreciates him or not!)

However, I will simply quote Derrida a bit, to close my thoughts for now. With the caveat, of course, that my use of the two terms here is not exactly the same as his--but I believe the point holds true for both of us.

If we wish to push our ability to write and express meaning beyond our current means, we must seek to unravel, and perhaps "de-construct" the nature of our current system. I cringe while saying so, but we must "hack" our language. Perhaps "script" is a better verb, not sounding quite as cliche, and closer to my idea of what we should actually be doing: using and adapting pieces of our language as a material code for better interfacing with our language. A book is an excellent material technology, but we cannot use a book as our model of communication after considering our new, and vastly more "scripted" material technologies of signification:

"The good writing has therefore always been comprehended. [...] Comprehended, therefore, within a totality, and enveloped in a volume or a book. The idea of the book is the idea of a totality, finite or infinite, of the signifier; this totality of the signifier cannot be a totality unless a totality constituted by the [material] signified preexists it, supervises its inscriptions and its signs, and is independent of it in its ideality. The idea of the book, which always refers to a natural totality, is profoundly alien to the sense of writing. It is the encyclopedic protection of theology and of logocentrism against the disruption of writing, against its aphoristic energy, and, as I shall specify later, against difference in general. If I distinguish this [un-totalistic, scriptable, material] text from the book, I shall say that the destruction of the book, as it is now under way in all domains, denudes the surface of the text. That necessary violence responds to a violence that was no less necessary."

-Derrida, Of Grammatology, "The Signifier and Truth"